[Paper Summary] GaussianDreamer (CVPR 2024) "GaussianDreamer: Fast Generation from Text to 3D Gaussians by Bridging 2D and 3D Diffusion Models"


Paper Info (Citations, Authors, Links)

Citations: 38 (as of Monday, 2024.10.28)

Authors (Affiliations): ( ) [ Huazhong University of Science and Technology, Huawei Inc. ]

Paper & GitHub links: [ Official ] [ Arxiv ] [ Official GitHub ] [ Project ]

Paper Summary

0. Overview Before the Walkthrough

1. Introduction

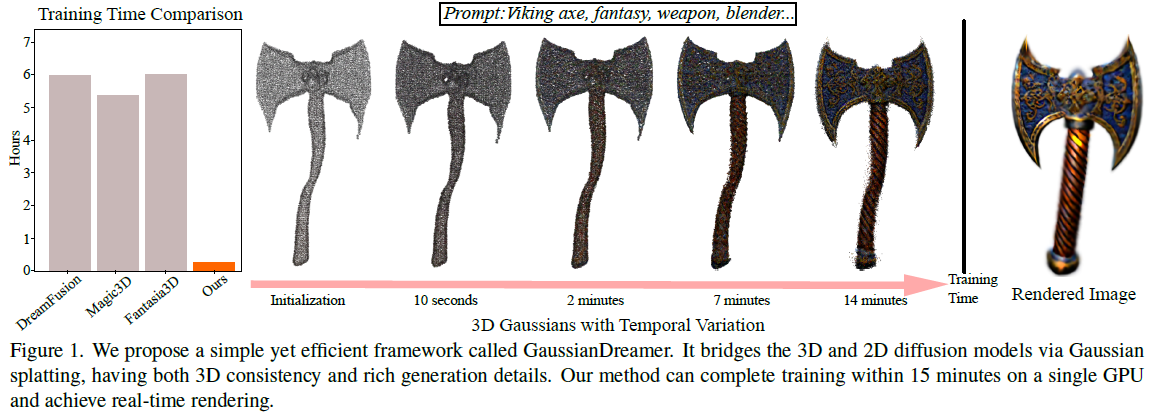

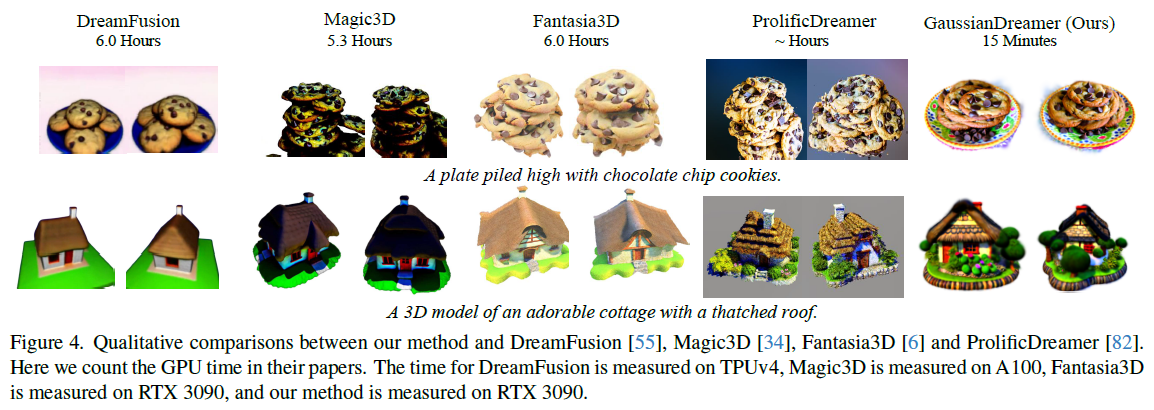

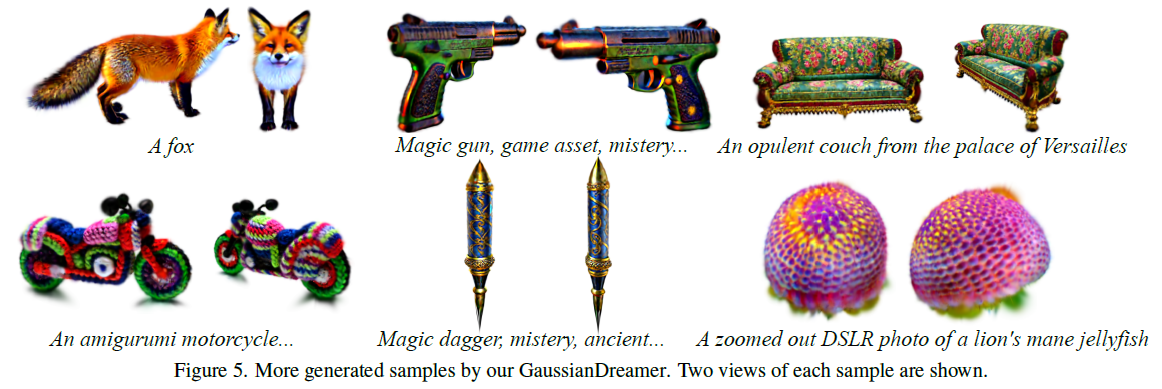

This paper introduces a text-to-3D method that combines a 3D diffusion model, a 2D diffusion model, and 3D Gaussian Splatting so that a 3D model can be trained quickly.

Our contributions can be summarized as follows.

• We propose a text-to-3D method, named as GaussianDreamer which bridges the 3D and 2D diffusion models via Gaussian splatting, enjoying both 3D consistency and rich generation details.

• Noisy point growing and color perturbation are introduced to supplement the initialized 3D Gaussians for further content enrichment.

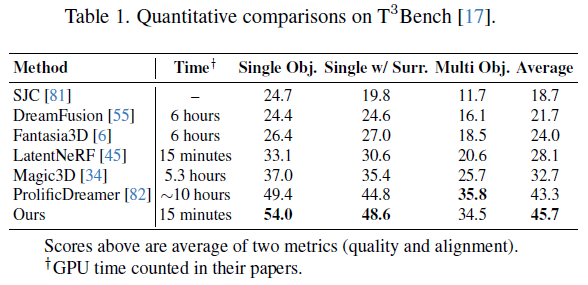

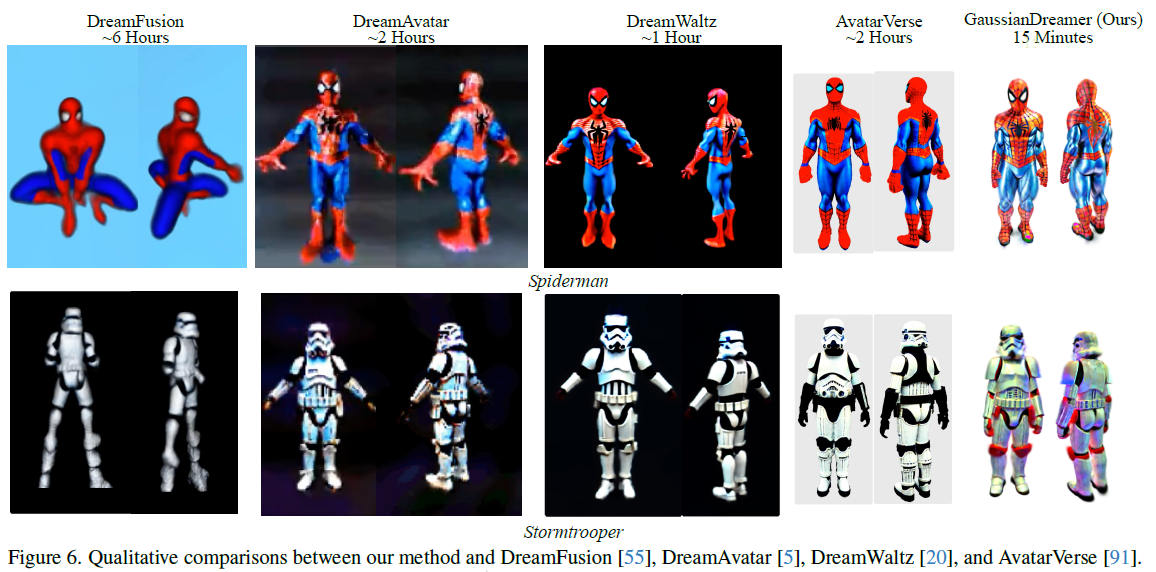

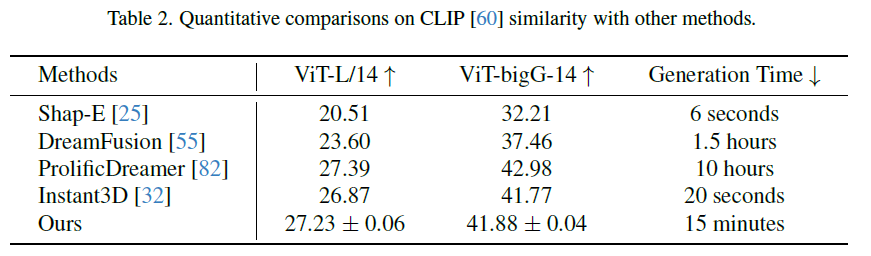

• The overall method is simple and quite effective. A 3D instance can be generated within 15 minutes on one GPU, much faster than previous methods, and can be directly rendered in real time.

3. Methods

3.1 Preliminaries

DreamFusion : https://aigong.tistory.com/652

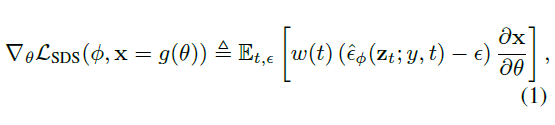

Score Distillation Sampling (SDS) loss: a loss that enables 3D generation by using the score function of a pretrained 2D diffusion model

Uses MipNeRF as the 3D representation
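For reference, here is the SDS gradient as written in DreamFusion. The symbols are:

- $x = g(\theta)$: the image rendered from the 3D parameters $\theta$
- $z_t = \alpha_t x + \sigma_t \epsilon$: that image noised to timestep $t$
- $y$: the text prompt
- $\hat{\epsilon}_\phi$: the frozen 2D diffusion model's noise prediction
- $w(t)$: a timestep weighting

Only $\theta$ receives gradients. The diffusion U-Net's Jacobian is dropped, which is what makes SDS cheap.

$$
\nabla_{\theta}\mathcal{L}_{\mathrm{SDS}}\big(\phi, x = g(\theta)\big) = \mathbb{E}_{t,\epsilon}\left[ w(t)\,\big(\hat{\epsilon}_{\phi}(z_t; y, t) - \epsilon\big)\,\frac{\partial x}{\partial \theta} \right]
$$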

3D Gaussian Splatting

A splatting-based image rendering method that runs in real time

Represents the scene as a set of anisotropic 3D Gaussians

Rendering process

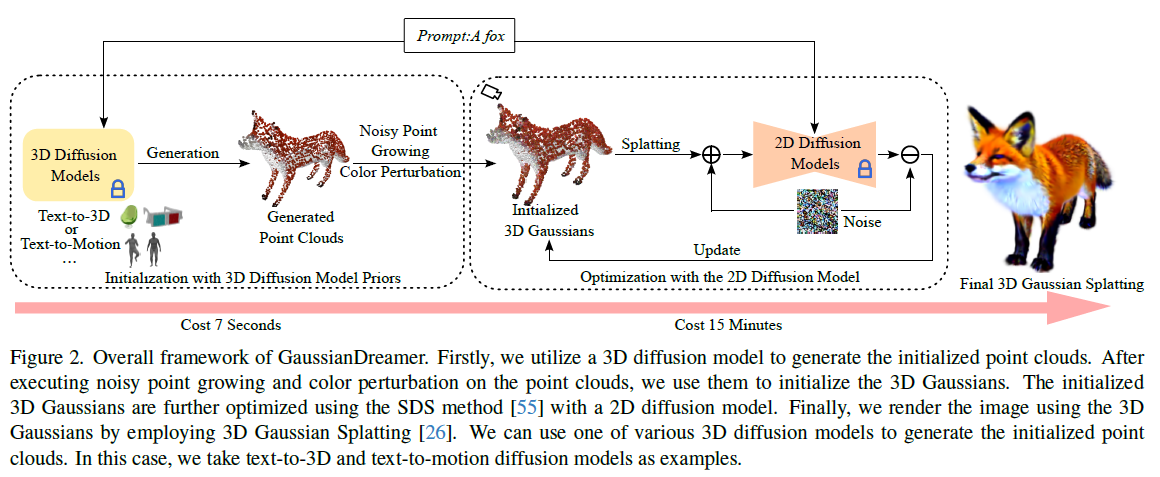
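The rendering step can be sketched for a single pixel. This is a minimal NumPy sketch of the standard 3DGS compositing rule. It assumes the Gaussians have already been projected to 2D (mean $\mu'$, covariance $\Sigma'$) and sorted front to back.

- Each splat's alpha is its opacity times the 2D Gaussian falloff.
- The pixel color is $C = \sum_i c_i \alpha_i \prod_{j<i}(1-\alpha_j)$.

The function names are illustrative. The official 3DGS code does this inside a tile-based CUDA rasterizer.

```python
import numpy as np


def splat_alpha(pixel, mean2d, cov2d, opacity):
    """Alpha of one projected Gaussian at a pixel: opacity * exp(-0.5 * d^T Sigma'^-1 d)."""
    d = np.asarray(pixel, dtype=float) - np.asarray(mean2d, dtype=float)
    return opacity * np.exp(-0.5 * d @ np.linalg.solve(cov2d, d))


def composite(colors, alphas):
    """Front-to-back alpha blending of depth-sorted splats along one pixel ray.

    colors: (N, 3) RGB, alphas: (N,), both ordered near to far.
    """
    colors = np.asarray(colors, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    # Transmittance before splat i: T_i = prod_{j<i} (1 - alpha_j), with T_0 = 1.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = alphas * trans
    return (weights[:, None] * colors).sum(axis=0)


# Two half-transparent splats, red in front of green:
# red contributes with weight 0.5, green with weight 0.5 * 0.5 = 0.25.
pixel_color = composite([[1, 0, 0], [0, 1, 0]], [0.5, 0.5])
```

An opaque splat ($\alpha = 1$) drives the transmittance to zero, so nothing behind it contributes.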

3.2 Overall Framework

(1) Initialization with a 3D diffusion model (text-to-3D or text-to-motion diffusion model)

(2) Noisy point growing and color perturbation

(3) Optimization with a 2D diffusion model (SDS)

(4) Real-time rendering via Gaussian Splatting

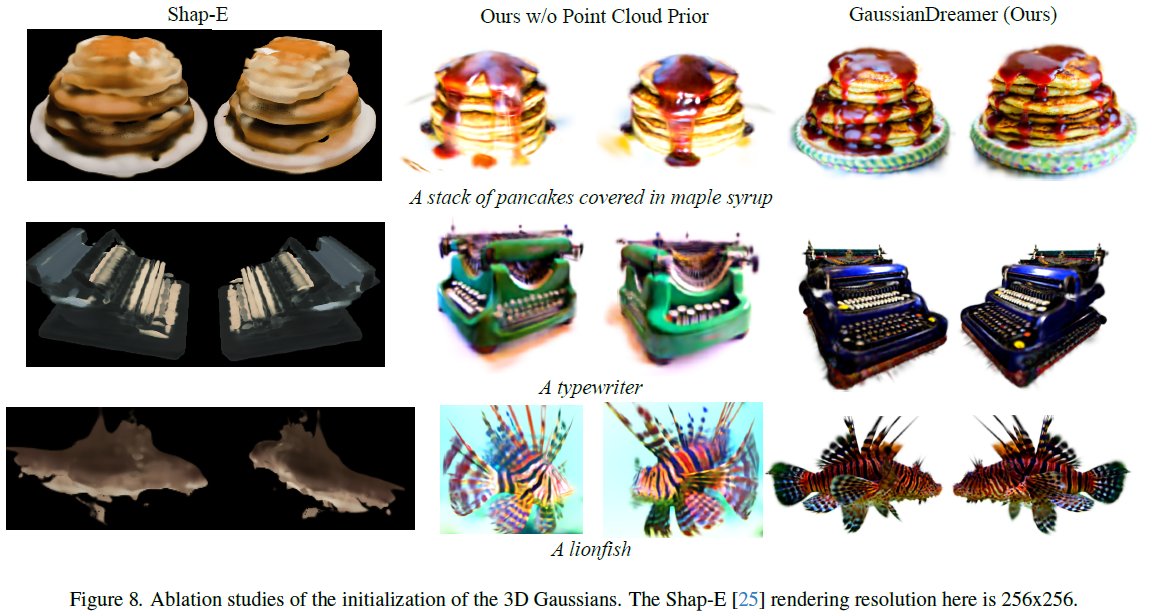

3.3 Gaussian Initialization with 3D Diffusion Model Priors

3.3.1 Text-to-3D Diffusion Model

Predict SDF values and texture colors with the 3D generative model

Generate the triangle mesh $m$: query the SDF values at the vertices, then query the texture colors at each vertex of $m$

$pt_m(p_m, c_m)$: the vertices and colors of $m$ converted into a point cloud

$p_m$: positions of the points, identical to the vertex coordinates of $m$

$c_m$: colors of the points

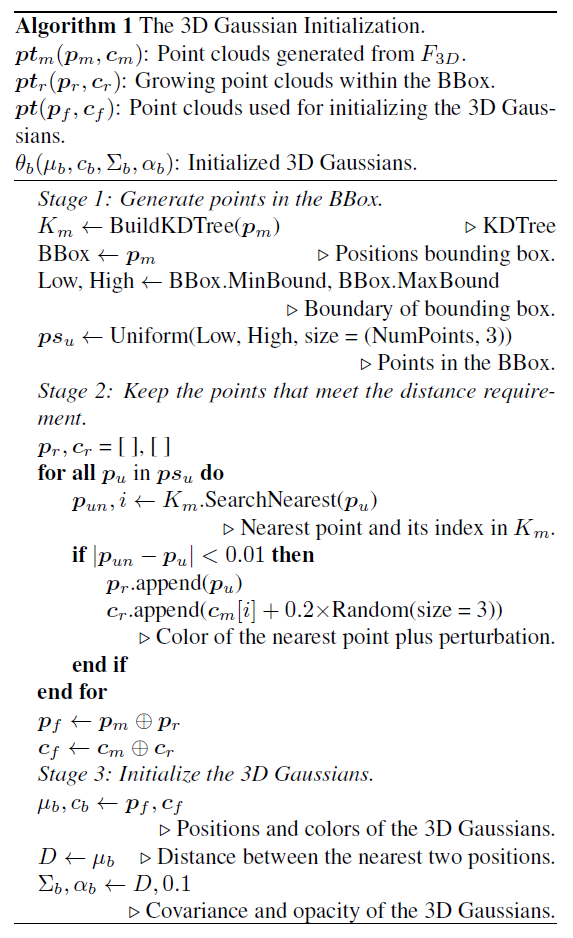

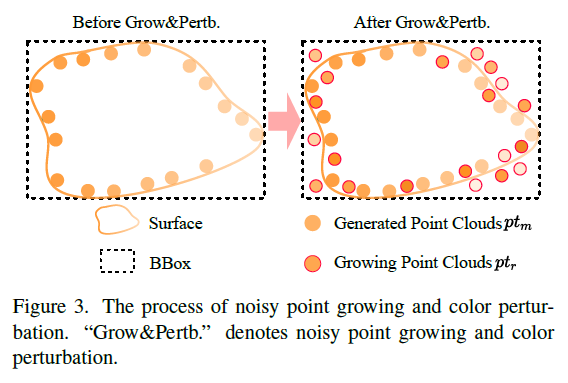

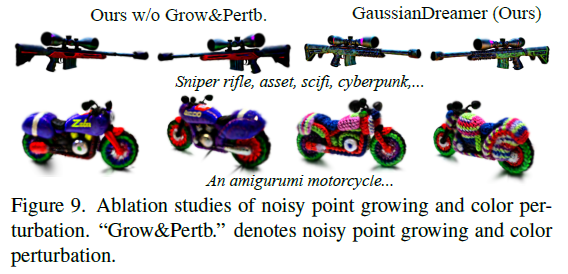

Noisy Point Growing and Color Perturbation

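The $pt_m$ obtained above is not used directly as the initial 3D Gaussians

To improve the initialization, noisy point growing and color perturbation are applied

Compute the bounding box (BBox) of the surface points $pt_m$

Uniformly grow a point cloud $pt_r$ inside the BBox

A KDTree built on the positions $p_m$ is used to filter the grown points

For each grown point, find its nearest point in the KDTree and keep only the points whose normalized distance is within 0.01

Colors: each kept point takes the color of its nearest neighbor plus a random perturbation sampled from 0 to 0.2

Merge the positions and colors of $pt_m$ and $pt_r$ to obtain the final point cloud.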

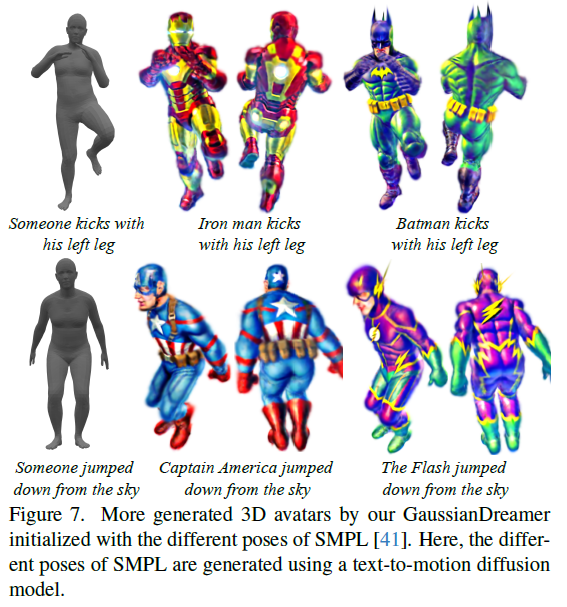
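The growing and perturbation steps can be sketched as follows. This is a minimal NumPy sketch, not the official implementation. It rests on these assumptions:

- The box is axis-aligned.
- "Normalized distance" means the distance divided by the largest box extent.
- The number of grown points, `n_grow`, is a hypothetical parameter.
- The perturbation is added per channel and then clipped to [0, 1].
- Nearest neighbors are found by brute force instead of with the KDTree the paper uses. `scipy.spatial.cKDTree` would be the natural replacement for large point clouds.

```python
import numpy as np


def grow_and_perturb(p_m, c_m, n_grow=2000, dist_thresh=0.01, max_perturb=0.2, seed=0):
    """Noisy point growing + color perturbation (illustrative sketch).

    p_m: (N, 3) positions of pt_m, c_m: (N, 3) RGB colors in [0, 1].
    Returns the merged positions and colors of pt_m and the kept grown points pt_r.
    """
    rng = np.random.default_rng(seed)
    # 1) Axis-aligned bounding box of the surface points.
    lo, hi = p_m.min(axis=0), p_m.max(axis=0)
    # 2) Uniformly grow random points inside the box.
    p_r = rng.uniform(lo, hi, size=(n_grow, 3))
    # 3) Nearest surface point for each grown point (brute force; the paper uses a KDTree).
    d2 = (p_r**2).sum(1)[:, None] + (p_m**2).sum(1)[None, :] - 2.0 * p_r @ p_m.T
    nn = d2.argmin(axis=1)
    dist = np.sqrt(np.maximum(d2[np.arange(n_grow), nn], 0.0))
    # Assumption: normalize by the largest extent of the bounding box.
    keep = dist / (hi - lo).max() < dist_thresh
    p_r, nn = p_r[keep], nn[keep]
    # 4) Color = nearest neighbor's color + uniform noise in [0, max_perturb].
    noise = rng.uniform(0.0, max_perturb, size=(len(p_r), 3))
    c_r = np.clip(c_m[nn] + noise, 0.0, 1.0)  # clipping is an added assumption
    # 5) Merge pt_m and pt_r into the final point cloud.
    return np.concatenate([p_m, p_r]), np.concatenate([c_m, c_r])
```

Usage: `P, C = grow_and_perturb(vertices, vertex_colors)`, where the two arrays hold the positions and colors of $pt_m$. A small `dist_thresh` keeps only points hugging the surface, so the grown points thicken the shell rather than filling the box.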

(Optional) 3.3.2 Text-to-Motion Diffusion Model

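This step is used to generate human body motion sequences from text

Convert the generated human pose keypoints into an SMPL model, represented as the triangle mesh $m$

Convert the mesh into the point cloud $pt_m$

Initialize the 3D Gaussians from the point cloud $pt$

Opacity $\alpha_b$: initialized to 0.1

Covariance $\Sigma_b$: computed from the distance between the two nearest points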
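The initialization can be sketched as follows. This NumPy sketch takes the simplest reading of the text:

- Every point becomes an isotropic Gaussian ($\Sigma_b = s^2 I$, no rotation).
- The scale $s$ is the mean distance to the point's two nearest neighbors.
- The color is copied from the point, and the opacity is set to 0.1.

Real 3DGS code stores log-scales and a rotation quaternion rather than $\Sigma_b$ directly, so the dictionary layout here is hypothetical.

```python
import numpy as np


def init_gaussians(p, c, init_opacity=0.1, k=2):
    """Turn a point cloud (positions p, colors c) into initial 3D Gaussian parameters.

    Assumption: isotropic covariance Sigma_b = s^2 * I, where s is the mean
    distance from each point to its k nearest neighbors (k=2 here).
    """
    p = np.asarray(p, dtype=float)
    sq = (p**2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * p @ p.T     # pairwise squared distances
    np.fill_diagonal(d2, np.inf)                        # ignore the point itself
    nearest = np.sqrt(np.maximum(np.sort(d2, axis=1)[:, :k], 0.0))
    s = nearest.mean(axis=1)
    n = len(p)
    return {
        "mu": p.copy(),                                 # means = point positions
        "color": np.asarray(c, dtype=float).copy(),     # colors from the point cloud
        "cov": (s**2)[:, None, None] * np.eye(3),       # (n, 3, 3) isotropic covariances
        "opacity": np.full(n, init_opacity),            # alpha_b = 0.1
    }
```

Because the scale comes from local point spacing, dense regions start with small splats and sparse regions with larger ones. That helps avoid holes before optimization starts.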

3.4 Optimization with the 2D Diffusion Model

Optimize the 3D Gaussians $\theta_b$ with the SDS (Score Distillation Sampling) loss of the 2D diffusion model $F_{2D}$
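To make the SDS update concrete, here is a toy NumPy loop. It is clearly not the paper's setup:

- The "renderer" is the identity ($x = g(\theta) = \theta$, so $\partial x / \partial \theta = I$).
- The hypothetical `toy_eps_pred` stands in for $F_{2D}$. It is the exact noise predictor for a data distribution concentrated on a single `target`, so SDS pulls $\theta$ toward that target.
- The linear DDPM beta schedule, the timestep range [20, 980), and the weighting $w(t) = 1 - \bar{\alpha}_t$ are assumptions taken from common practice, not values given here.

```python
import numpy as np

# Assumed DDPM noise schedule (linear betas), not taken from the paper.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas_bar = np.cumprod(1.0 - betas)


def toy_eps_pred(x_t, t, target):
    """Hypothetical stand-in for F_2D: the exact noise prediction when all data equals `target`."""
    ab = alphas_bar[t]
    return (x_t - np.sqrt(ab) * target) / np.sqrt(1.0 - ab)


def sds_grad(x, target, rng):
    """One-sample SDS gradient w(t) * (eps_hat - eps); dx/dtheta = I for the identity renderer."""
    t = rng.integers(20, 980)
    ab = alphas_bar[t]
    eps = rng.standard_normal(x.shape)
    x_t = np.sqrt(ab) * x + np.sqrt(1.0 - ab) * eps   # forward-noise the rendered image
    w = 1.0 - ab                                      # assumed weighting w(t)
    return w * (toy_eps_pred(x_t, t, target) - eps)


def optimize(theta, target, steps=1200, lr=0.5, seed=0):
    """Plain gradient descent on theta using the SDS gradient."""
    rng = np.random.default_rng(seed)
    for _ in range(steps):
        theta = theta - lr * sds_grad(theta, target, rng)
    return theta
```

Because this toy predictor is exact, $\hat{\epsilon} - \epsilon$ reduces to $\sqrt{\bar{\alpha}_t}\,(x - \text{target})/\sqrt{1-\bar{\alpha}_t}$ and the sampled noise cancels. With a real text-conditioned U-Net it does not cancel, so each SDS step is only a noisy estimate.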

4. Experiments

Implementation: threestudio, PyTorch

3D diffusion models: Shap-E (text-to-3D), MDM (text-to-motion)

2D diffusion model : stabilityai/stable-diffusion-2-1-base

3D Gaussian hyperparameters

- Learning rates: opacity $10^{-2}$, position $5 \times 10^{-5}$

- Other minor hyperparameters: see the paper

Training iterations: 1200

Training time: about 15 min

Hardware: a single RTX 3090

512x512 -> 1024x1024

4.5. Limitations

Results are not always good:

Edges are not always sharp

Multi-face problem

Difficulty generating large-scale scenes, e.g., indoor scenes